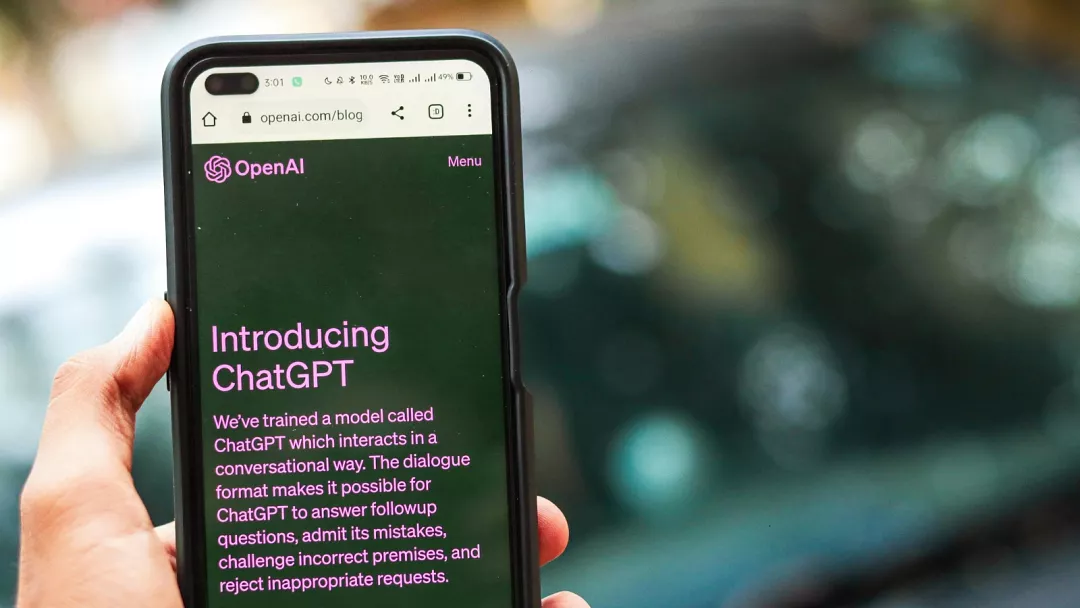

OpenAI is under scrutiny following a privacy complaint filed by the Austrian advocacy group Noyb on behalf of Arve Hjalmar Holmen, a Norwegian man. The complaint alleges that OpenAI’s chatbot, ChatGPT, falsely stated that Holmen had been convicted of murdering two of his sons and attempting to murder a third, blending accurate personal details with fabricated criminal accusations.

Holmen discovered the misinformation when he asked ChatGPT about himself. The response mixed correct information, such as his hometown and the number and gender of his children, with the false claim that he had committed these crimes. The incident has raised concerns about the reliability of AI-generated information about real people and its potential to cause reputational harm.

Noyb’s complaint, filed with the Norwegian Data Protection Authority, asserts that OpenAI violated the European Union’s General Data Protection Regulation (GDPR), specifically Article 5(1)(d), which requires that personal data be accurate and, where necessary, kept up to date. The advocacy group is urging the authority to order OpenAI to delete the defamatory content, adjust its model to prevent similar inaccuracies, and pay an administrative fine to deter future violations.

This case underscores the broader problem of AI “hallucinations,” in which models generate incorrect or misleading information. Even when a disclaimer warns of possible inaccuracies, output that blends factual and false details can cause significant reputational damage. Noyb argues that merely displaying a disclaimer about possible errors is insufficient and that AI companies must comply with data protection laws.

OpenAI has yet to comment on this specific incident. The outcome of the complaint could have far-reaching implications for AI developers, and it highlights the need for robust safeguards that prevent the dissemination of false information and protect individuals’ rights under data protection regulations.